SAN Performance terms

The following definitions are frequently used to describe performance:

+ Attenuation — Loss of power specified in decibels per kilometer (dB/km).

+ Bandwidth — Also referred to as nominal channel data rate. Bandwidth can be defined as the maximum rate at which data can be transmitted and is independent of the applied load. It is sometimes expressed in terms of signaling capacity, as in SCSI, or in terms of data transmission capacity, as in Fibre Channel.

Examples

The bandwidth of an Ultra320 SCSI bus is 320MB/s.

The bandwidth of 2Gb/s Fibre Channel is 200MB/s.

+ Data rate — The amount of data per unit of time moved across an I/O bus in the course of executing an I/O load. The data rate varies according to the:

• Applied load

• Request size

• Read/write ratio

The data transfer rate is usually expressed as megabytes per second (MB/s).

Because of bus arbitration and protocol overhead on the Ultra Wide SCSI bus, the data rate actually achieved is less than the rated bandwidth.

Example

The data rate for a Wide Ultra SCSI bus is approximately 38MB/s.

+ Request rate — The number of requests processed per second. Workloads attempt to request data at their natural rate. If this rate is not met, a queue builds up at the processor and eventually saturation results.

+ Response time — The time a device takes to process a request from issuance to completion. It is the sum of wait time and service time. Response time is the primary indication of performance and is typically expressed in milliseconds (ms).

+ Rotational speed — The speed at which a hard disk's platters spin, measured in revolutions per minute (rpm). Rotational speed is important to the overall speed of the system, because a slow hard disk can slow down a fast processor. The effective speed of a hard disk is determined by several factors.

Disk rpm is a critical component of hard drive performance because it directly affects rotational latency and the disk transfer rate. The faster the disk spins, the more data passes under the magnetic heads that read it; the slower the rpm, the higher the mechanical latencies.
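To put numbers on the rpm effect: average rotational latency is usually taken as half a revolution, because on average the target sector is half a turn away from the head when the seek completes. The short Python sketch below computes it for a few common spindle speeds; the function name is illustrative only.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds: half of one revolution."""
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms in one minute
    return ms_per_revolution / 2.0

for rpm in (7_200, 10_000, 15_000):
    print(f"{rpm:>6} rpm -> {avg_rotational_latency_ms(rpm):.2f} ms")
```

For 10,000 rpm this gives 3.00ms and for 15,000 rpm 2.00ms, so the faster spindle saves about 1ms of rotational latency on every random I/O.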

+ Seek time — The time delay spent positioning the read/write head before data can be read from or written to a disk drive. To read or write data at a particular place on the disk, the read/write head must first move to that place. This process is known as seeking, and the time it takes for the head to move to the right place is the seek time.

+ Service time — The amount of time a device needs to process a request. Service time is also known as latency and varies with request characteristics.
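One common back-of-the-envelope model (not given in the source) estimates a disk's service time for a random I/O as average seek time plus average rotational latency plus transfer time, and the drive's maximum request rate as the reciprocal of that. The sketch below uses hypothetical drive parameters (4.7ms average seek, 40MB/s media rate, 8KB I/Os); substitute figures from a real drive's data sheet. It ignores controller overhead, caching, and queuing.

```python
def estimate_service_time_ms(avg_seek_ms: float, rpm: float,
                             io_size_kb: float, media_rate_mb_s: float) -> float:
    """Simplified random-I/O service time: seek + rotational latency + transfer."""
    rotational_ms = 0.5 * 60_000.0 / rpm        # half a revolution on average
    transfer_ms = io_size_kb / media_rate_mb_s  # KB / (MB/s) = ms when 1MB = 1,000KB
    return avg_seek_ms + rotational_ms + transfer_ms

# Hypothetical 10,000-rpm drive: 4.7ms average seek, 40MB/s media rate, 8KB I/Os.
service_ms = estimate_service_time_ms(avg_seek_ms=4.7, rpm=10_000,
                                      io_size_kb=8, media_rate_mb_s=40)
max_iops = 1000.0 / service_ms  # one request at a time, no queuing
print(f"service time ~{service_ms:.1f} ms, ~{max_iops:.0f} I/Os per second")
```

With these assumed parameters the estimate is about 7.9ms, or roughly 127 I/Os per second, which is in the same range as the 120 I/Os per second per drive used in the RAID examples of this post.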

+ Throughput — The number of I/O requests satisfied per unit of time. Throughput is expressed in I/O requests per second, where a request is an application request to a storage subsystem to perform a read or write operation.

+ Utilization — The fraction of time a device is busy, usually expressed as a percentage. Utilization depends on the service time and the request rate; 100% utilization is the maximum, at which point the device is never idle.
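Utilization, request rate, and service time are linked by the utilization law, U = X x S (request rate times service time). To show how response time (wait time plus service time) grows as utilization approaches 100%, the sketch below also assumes a simple single-server M/M/1 queue, where R = S / (1 - U). The M/M/1 model is an assumption added here for illustration, not something the source specifies, and real disk subsystems only approximate it. The 8ms service time is hypothetical.

```python
def utilization(request_rate_per_s: float, service_time_ms: float) -> float:
    """Utilization law: U = request rate x service time (as a fraction)."""
    return request_rate_per_s * service_time_ms / 1000.0

def mm1_response_time_ms(request_rate_per_s: float, service_time_ms: float) -> float:
    """Response time (wait + service) assuming an M/M/1 queue: R = S / (1 - U)."""
    u = utilization(request_rate_per_s, service_time_ms)
    if u >= 1.0:
        raise ValueError("request rate at or above device capacity: saturation")
    return service_time_ms / (1.0 - u)

SERVICE_MS = 8.0  # hypothetical service time, so capacity is 1000 / 8 = 125 requests/s
for rate in (50, 100, 110, 120):
    u = utilization(rate, SERVICE_MS)
    r = mm1_response_time_ms(rate, SERVICE_MS)
    print(f"{rate:>3} req/s  U={u:.0%}  response={r:.1f} ms  wait={r - SERVICE_MS:.1f} ms")
```

Under these assumptions, response time is about 13ms at 40% utilization but 40ms at 80%, well above the 15ms most applications need. This is the queue build-up described in the request rate definition.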

The following details expand on these definitions:

Most applications (90%) use I/O blocks of 2KB to 8KB and require a large number of I/Os per second. The other 10% of applications require relatively few I/Os per second, but each I/O transfers a large amount of data; video editing is one example. A SAN and its components, such as disk drives and RAID levels, can be designed to maximize either I/Os per second or MB/s, and the designs are very different in each case.

For a request rate of 2,000 I/Os per second spread evenly across 20 drives, each drive must process 100 I/Os per second. The graph shows that, with 8KB I/Os, this level of performance requires a bus that can handle approximately 16MB/s (2,000 x 8KB). Applications that use even smaller I/O sizes (2KB or 4KB) require even less bandwidth.
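The bandwidth figure is simply request rate x I/O size. The sketch below assumes 8KB I/Os for the 16MB/s case (the size implied by "even smaller I/O sizes (2KB or 4KB)") and uses 1MB = 1,000KB; with 1MB = 1,024KB the 8KB result is about 15.6MB/s. An even split of 2,000 I/Os per second over 20 drives works out to 2,000 / 20 = 100 I/Os per second per drive.

```python
def bus_mb_per_s(iops: float, io_size_kb: float) -> float:
    """Data rate needed on the bus: I/Os per second x I/O size (1MB = 1,000KB)."""
    return iops * io_size_kb / 1000.0

total_iops = 2_000
drives = 20
per_drive_iops = total_iops / drives  # even split across the drives

for size_kb in (8, 4, 2):
    print(f"{size_kb}KB I/Os: {bus_mb_per_s(total_iops, size_kb):.0f} MB/s")
print(f"per drive: {per_drive_iops:.0f} I/Os per second")
```

The 8KB, 4KB, and 2KB cases need roughly 16MB/s, 8MB/s, and 4MB/s respectively, far below the bandwidth of the buses listed earlier.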

In this case, the graph represents a 10,000-rpm SCSI hard drive. A manufacturer claims its hard drive has excellent performance and can process over 150 I/Os per second.

Note: Most applications require a response time of 15ms or less.

The performance gain in a given situation depends on the:

+ I/O profile

+ I/O size

+ Ratio of read to write requests

+ Frequency of reads/writes

+ RAID level

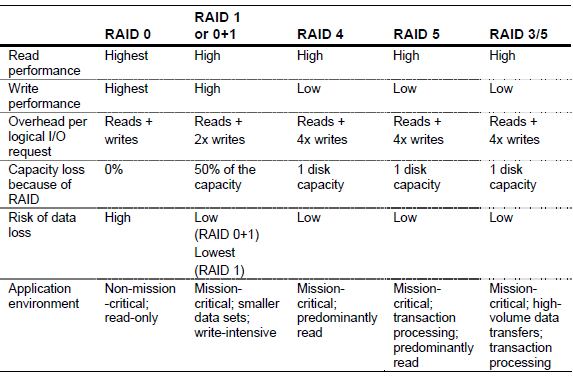

RAID level read/write comparison

RAID 0 (50% reads and 50% writes)

Assuming that all drives are 10,000rpm rated at 120 I/Os per second per drive, using five 36GB disks provides a performance of 5 x 120 I/Os per second. Total performance is therefore 600 I/Os per second. Because there is no RAID overhead with RAID 0, 600 I/Os per second are available to the application (300 reads and 300 writes).

RAID 0+1 (50% reads and 50% writes)

Using ten 36GB disks provides a performance of 10 x 120 I/Os per second. Total performance is therefore 1,200 I/Os per second. Accounting for the RAID 1 overhead, every write request at the application level translates to two writes at the disk level. Applying the ratio of reads to writes, for every read request, there are two write requests.

+ One-third of the I/Os are reads (1/3 of 1,200 I/Os = 400 I/Os for reads)

+ Two-thirds of the I/Os are writes (2/3 of 1,200 I/Os = 800 I/Os for writes)

Therefore, 800 I/Os per second are available to the application (400 reads and 400 writes).

RAID 5 (50% reads and 50% writes)

Using six 36GB disks provides a performance of 6 x 120 I/Os per second. Total performance is therefore 720 I/Os per second. Accounting for RAID 5 overhead, every write operation translates into two reads and two writes at the disk level. The system reads the data that needs to be replaced, reads the parity for the stripe, creates new parity, then writes the data and the parity. Applying the ratio of reads to writes, for every read request there are four read/write requests:

+ One-fifth of the I/Os are reads (1/5 of 720 I/Os = 144 I/Os for reads)

+ Four-fifths of the I/Os are the reads and writes generated by application writes (4/5 of 720 I/Os = 576 I/Os, which serve 576 / 4 = 144 application writes)

Therefore, 288 I/Os per second are available to the application (144 reads and 144 writes).
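All three walkthroughs follow the same pattern: each application read costs one disk I/O, and each application write costs a fixed number of disk I/Os (one for RAID 0, two for RAID 0+1, four for RAID 5, as described above). This multiplier is often called the write penalty. The Python sketch below reproduces the 600, 800, and 288 figures; the function and dictionary names are illustrative.

```python
# Disk I/Os generated per application write (the "write penalty"), per the text.
WRITE_PENALTY = {"RAID 0": 1, "RAID 0+1": 2, "RAID 5": 4}

def app_iops(disks: int, iops_per_disk: float, write_penalty: int,
             read_fraction: float = 0.5) -> float:
    """Application I/Os per second that a RAID set can sustain."""
    raw_iops = disks * iops_per_disk
    write_fraction = 1.0 - read_fraction
    # Each application read costs 1 disk I/O; each write costs write_penalty disk I/Os.
    disk_ios_per_app_io = read_fraction + write_fraction * write_penalty
    return raw_iops / disk_ios_per_app_io

for level, disks in (("RAID 0", 5), ("RAID 0+1", 10), ("RAID 5", 6)):
    total = app_iops(disks, 120, WRITE_PENALTY[level])
    # With a 50:50 mix, half of the application I/Os are reads and half are writes.
    print(f"{level}: {disks} disks -> {total:.0f} application I/Os per second "
          f"({total / 2:.0f} reads + {total / 2:.0f} writes)")
```

Changing `read_fraction` shows how a more read-heavy workload narrows the gap between the RAID levels.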

Assume that an application requires 1,000 I/Os per second with a 50:50 read/write ratio.

+ Which of the following nine options would be a possible solution?

+ Which solution would require the fewest disks?
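The nine options referred to above are not reproduced in this post, so the sketch below does not choose among them. It works backwards from the disk-level cost of each application I/O described above (one disk I/O per read; one, two, or four per write) to estimate the minimum number of drives each RAID level would need for 1,000 I/Os per second at 50:50, assuming 120 I/Os per second per drive as in the examples. The minimum set sizes (2, 4, and 3 disks) and the even-count rule for mirrored sets are assumptions added here; capacity, caching, and redundancy are not considered.

```python
import math

def disks_needed(target_iops: float, iops_per_disk: float, write_penalty: int,
                 read_fraction: float = 0.5, min_disks: int = 1,
                 even: bool = False) -> int:
    """Smallest disk count whose raw I/O rate covers the back-end load."""
    disk_load = target_iops * (read_fraction + (1.0 - read_fraction) * write_penalty)
    n = max(min_disks, math.ceil(disk_load / iops_per_disk))
    if even and n % 2:  # mirrored sets need disks in pairs
        n += 1
    return n

# (write penalty, assumed minimum disks, needs an even count)
LEVELS = {"RAID 0": (1, 2, False), "RAID 0+1": (2, 4, True), "RAID 5": (4, 3, False)}
for level, (penalty, minimum, even) in LEVELS.items():
    n = disks_needed(1_000, 120, penalty, min_disks=minimum, even=even)
    print(f"{level}: at least {n} disks")
```

Under these assumptions RAID 0 needs the fewest drives (9) but provides no redundancy; RAID 0+1 needs 14, and RAID 5 needs 21 because of its heavier write penalty.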

Example

Consider an OLTP environment with the following sample workload:

+ 1,000 users

+ Six transactions per user per minute

+ Six I/Os per transaction

+ 2KB per I/O

To determine I/Os per minute, multiply 1,000 users x 6 transactions per user per minute x 6 I/Os per transaction = 36,000 I/Os per minute.

To calculate throughput per second, divide 36,000 I/Os per minute by 60 seconds = 600 I/Os per second.

With 2KB per I/O, the data rate is 2KB per I/O x 600 I/Os per second = 1,200KB/s, or about 1.2MB/s.
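The same workload calculation as a short Python sketch, using the example's parameters and taking 1MB as 1,000KB (which is how the 1.2MB/s figure is obtained). The function name is illustrative.

```python
def oltp_load(users: int, tx_per_user_per_min: float, ios_per_tx: float,
              io_size_kb: float) -> tuple[float, float, float]:
    """Return (I/Os per minute, I/Os per second, MB/s) for a simple OLTP workload."""
    ios_per_min = users * tx_per_user_per_min * ios_per_tx
    ios_per_s = ios_per_min / 60.0
    mb_per_s = ios_per_s * io_size_kb / 1000.0  # 1MB = 1,000KB
    return ios_per_min, ios_per_s, mb_per_s

per_min, per_s, mb_s = oltp_load(users=1_000, tx_per_user_per_min=6,
                                 ios_per_tx=6, io_size_kb=2)
print(per_min, per_s, mb_s)
```

The result shows that this workload is I/O-bound rather than bandwidth-bound: 600 I/Os per second is the figure to size the disks for, while 1.2MB/s is trivial for any of the buses listed earlier.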

Disk caching affects performance in the following areas:

+ It reduces disk access (with cache hits).

+ It reduces the negative effects of the RAID overhead.

+ It assists in disk I/O request sorting and queuing.
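As a rough illustration of the first point, if a fraction of application reads are cache hits, only the remaining reads reach the disks. The sketch below uses a hypothetical 25% read-hit ratio and a hypothetical 500 application reads per second; real hit ratios depend on the workload and cache size, and write-back caching effects are not modeled.

```python
def backend_read_iops(app_read_iops: float, read_hit_ratio: float) -> float:
    """Disk reads per second left after cache hits are served from cache."""
    if not 0.0 <= read_hit_ratio <= 1.0:
        raise ValueError("read_hit_ratio must be between 0 and 1")
    return app_read_iops * (1.0 - read_hit_ratio)

# Hypothetical: 500 application reads per second, 25% served from cache.
print(backend_read_iops(500, 0.25))  # 375.0
```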
